Let there be light…

At first, the sun was the only source of light for Earth and its inhabitants. Later, our ancestors discovered how to control fire, and mankind has used fire-based light sources ever since.

Of course, fire is much more than just a light source, as it also allows cooking, creating tools, and warming the community using it. Many centuries went by without much change, until candles and oil lamps were developed, utilizing fire for the sole purpose of lighting. Jumping in time to much more recent history – the first gas and kerosene lamps became popular in the 18th and 19th centuries, respectively. At the beginning of the 19th century, gas lanterns placed on poles were installed in the streets of major European and American cities. Yes, they were still based on fire, but the fire was now much more controlled.

The rise of electric light

The end of the 19th century marked the rise of the electric light, and especially the incandescent light bulb, falsely attributed solely to Thomas Edison. Actually, more than 20 inventors presented their versions of the incandescent light bulb prior to Edison, but Edison’s choice of materials, his use of high vacuum, and the engineered electrical circuit he presented were the best of his time, so his version prevailed.

Though more than 140 years have gone by since Edison’s invention, the incandescent light bulb is still considered the Holy Grail in terms of the visible part of the spectrum it emits. This is because light is only a byproduct of the bulb’s operation, which essentially consists of passing electrical current through a high-resistance filament, heating it up to 3000 °C.

Consequently, only a small fraction (several percent) of the electrical energy is transformed into light, and the rest is dissipated as unwanted heat. Moreover, there is also much emitted radiation invisible to humans; hence the luminous efficacy of an incandescent light bulb, which measures how well a light source produces visible radiation, is limited to 17 lumens/Watt.

Furthermore, the harsh working conditions (vacuum, high temperature) make the incandescent light bulb sensitive and limit its working lifetime (usually up to 1,000 hours). On the other hand, the radiation emitted from an incandescent lamp resembles that of the sun, which is essentially just a very hot ball some 150 million km away from us.

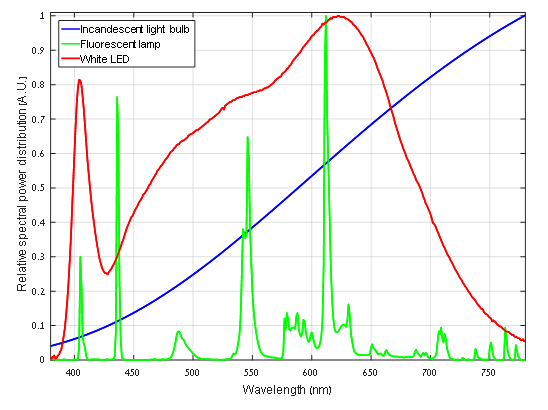

The sun, being a hot non-reflecting body at thermal equilibrium, emits light according to the physics of blackbody radiation. Looking only at the spectrum of emitted light, the difference between the sun and an incandescent light bulb can be attributed only to their different temperatures. This is why light emitted from incandescent light bulbs seems natural to us. Figure 1 includes a typical smooth spectrum of an incandescent light bulb in the visible part of the spectrum.
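To make the temperature argument concrete, here is a minimal sketch that treats both the filament and the sun as ideal blackbodies. The temperatures are assumptions for illustration: 3000 °C (about 3273 K) for the filament, as quoted above, and 5772 K as a nominal effective temperature for the sun's surface. The 380–780 nm limits for the visible band are also an assumed convention. The result is a raw radiometric fraction with no weighting by the eye's sensitivity, so it is not directly comparable to a luminous efficacy figure.

```python
import math

# Physical constants (SI units)
H = 6.62607015e-34        # Planck constant, J*s
C = 2.99792458e8          # speed of light, m/s
K_B = 1.380649e-23        # Boltzmann constant, J/K
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W/(m^2 K^4)
WIEN_B = 2.897771955e-3   # Wien displacement constant, m*K


def planck_exitance(wavelength_m, temp_k):
    """Spectral radiant exitance of a blackbody, in W/m^2 per metre of wavelength."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * math.pi * H * C**2 / (wavelength_m**5 * math.expm1(x))


def visible_fraction(temp_k, lo_nm=380.0, hi_nm=780.0, steps=2000):
    """Fraction of the total blackbody power emitted between lo_nm and hi_nm.

    Uses the trapezoid rule for the band and the Stefan-Boltzmann law
    (sigma * T^4) for the total over all wavelengths.
    """
    lo, hi = lo_nm * 1e-9, hi_nm * 1e-9
    step = (hi - lo) / steps
    acc = 0.5 * (planck_exitance(lo, temp_k) + planck_exitance(hi, temp_k))
    for i in range(1, steps):
        acc += planck_exitance(lo + i * step, temp_k)
    return acc * step / (SIGMA * temp_k**4)


def peak_wavelength_nm(temp_k):
    """Wavelength of maximum emission according to Wien's displacement law."""
    return WIEN_B / temp_k * 1e9


FILAMENT_K = 3000.0 + 273.15  # assumed: the 3000 degrees C quoted in the text
SUN_K = 5772.0                # assumed: nominal solar effective temperature

for label, temp in (("filament", FILAMENT_K), ("sun", SUN_K)):
    print(f"{label}: peak at {peak_wavelength_nm(temp):.0f} nm, "
          f"{100 * visible_fraction(temp):.0f}% of power in the visible band")
```

Under these assumptions the filament's emission peaks in the infrared while the sun's peaks within the visible band, which is one way to see why most of the bulb's output is invisible even though the two spectra have the same smooth shape.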

Next step – neon and fluorescent lights

Later, neon and fluorescent lights were invented: based on atomic transitions in gas tubes triggered by an electric discharge, these light sources proved to be much more energy-efficient than incandescent light bulbs (luminous efficacy of up to 60 lumens/Watt) and even more durable (with up to 15 times longer working lifetime). Adding fluorescent materials to the tube allowed the generated ultraviolet radiation to be absorbed and longer wavelengths to be emitted, hence creating a white light source. This technology allowed creating large signs for commercial purposes and became widely used in the 20th century.

The main drawback of these lamps, besides their fragility and the fact that they may contain hazardous elements, is that their emission spectra contain several discrete spikes at the wavelengths of the atomic transitions (depending on the exact gas mixture), and are therefore not smooth and continuous, as can be seen in Fig. 1. This is why these light sources seem less natural to our eyes.

The LED era

And then, the LED era began. LEDs, or light-emitting diodes, are semiconductor light sources. Basically, an LED emits light when electrical current flows through it: electrons cross a junction between two semiconductor materials, recombine with electron holes, and release photons with energy set by the band gap of the diode.
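The link between band gap and color follows from the photon energy relation E = hc/λ. The sketch below converts between the two; the example wavelengths (940 nm infrared, 650 nm red, 450 nm blue) are illustrative assumptions rather than data for any specific device, and real LEDs emit in a band around this value rather than at a single wavelength.

```python
# Planck constant times the speed of light, expressed in eV*nm.
HC_EV_NM = 1239.841984


def photon_energy_ev(wavelength_nm):
    """Energy in electronvolts of a photon with the given wavelength in nm."""
    return HC_EV_NM / wavelength_nm


def emission_wavelength_nm(band_gap_ev):
    """Approximate emission wavelength in nm for a given band gap in eV."""
    return HC_EV_NM / band_gap_ev


# Illustrative wavelengths (assumed, not taken from a datasheet).
for label, wavelength in (("infrared", 940.0), ("red", 650.0), ("blue", 450.0)):
    print(f"{label:>8}: {wavelength:.0f} nm needs a band gap of "
          f"about {photon_energy_ev(wavelength):.2f} eV")
```

Shorter wavelengths require a wider band gap, which is consistent with blue emitters having needed different semiconductor materials than the earlier infrared and red ones.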

LEDs started to become popular in electronics equipment in the 1960s, but at the beginning they emitted light only in the infrared region, invisible to the human eye. Later, red LEDs were invented, and they were used mainly as indicators in electronic devices. It took more than 30 years until scientific breakthroughs enabled this technology to be used for general lighting purposes – particularly the invention of the high-brightness blue LED, which relied on developing advanced manufacturing processes using new materials. With powerful blue LED sources it became possible to create white light sources, using a concept similar to that of the fluorescent lamp: adding a material that absorbs blue light and emits light at longer wavelengths, creating a white-light source that is suitable for lighting. The three Japanese inventors who advanced the development of the high-brightness blue LED, working in two independent research groups, received the 2014 Nobel Prize in Physics for their discoveries.

And now what?

Nowadays, LED lights are everywhere! The LED market is expected to grow by an order of magnitude in the decade ending in 2023, and incandescent light bulbs will soon become history, as more and more countries ban their sale due to their inefficiency.

How was this new kid on the block able to defeat a technology that has been with us for a century and a half? First and foremost, current LEDs have a luminous efficacy of up to 300 lumens/Watt and a long lifetime, 18 times longer than that of an efficient incandescent light bulb. Moreover, many LEDs can be assembled together with the proper electronics and optics to form LED luminaires that can light everything from small rooms to giant stadiums. Furthermore, LEDs have a short warm-up time, can be easily dimmed, are much more shock resistant, and are less sensitive to rapid on/off cycles.
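To put the efficacy figures quoted in this article side by side, the short sketch below computes the electrical power each technology would need to produce the same luminous flux. The 800-lumen target is an assumption chosen for illustration, and the fluorescent and LED values are the best-case ("up to") figures from the text, so typical products will need somewhat more power.

```python
# Luminous efficacy figures quoted in the article, in lumens per watt.
EFFICACY_LM_PER_W = {
    "incandescent": 17.0,
    "fluorescent": 60.0,   # best-case figure from the text
    "LED": 300.0,          # best-case figure from the text
}

TARGET_FLUX_LM = 800.0  # assumed target light output, for illustration only


def electrical_power_w(luminous_flux_lm, efficacy_lm_per_w):
    """Electrical power needed to emit the given luminous flux."""
    return luminous_flux_lm / efficacy_lm_per_w


for name, efficacy in EFFICACY_LM_PER_W.items():
    power = electrical_power_w(TARGET_FLUX_LM, efficacy)
    print(f"{name:>12}: {power:5.1f} W for {TARGET_FLUX_LM:.0f} lm")
```

With these numbers the incandescent bulb draws roughly 47 W, the fluorescent lamp about 13 W, and the LED under 3 W for the same light output.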

LEDs are expected to dominate the lighting industry completely, and they are now even used for indoor farming (although plants have different spectral preferences than humans, so the LEDs used for indoor horticulture emit mainly in the red and blue parts of the spectrum).

It may seem that the LED is a flawless lighting solution, but it has its disadvantages as well. First, the white LED spectrum contains much more short-wavelength (blue and violet) light than that of an incandescent lamp, which may pose some additional risk to the eyes. Moreover, although an LED’s white light spectrum looks much more natural than that of fluorescent or neon light, it typically contains a peak around 405 or 450 nm (violet or blue) and cannot compete with the smooth spectrum of the incandescent light bulb. This effect can be seen in Fig. 1. Lastly, an LED may flicker, i.e., change its brightness rapidly in time, which can affect our brain in some circumstances, depending on the frequency and magnitude of the flicker. So, LED lights may not be perfect just yet, but extensive research and development efforts are improving their qualities at an ever-growing pace.

Because the incandescent light bulb served as the standard illuminator for more than a century, and because the light it emits appears natural, researchers have developed various metrics and standards to quantify how the light emitted by other lighting technologies compares to natural light. A few important ones are:

- Correlated color temperature (CCT), which is the temperature of the blackbody radiator whose color most closely resembles that of a non-incandescent light source

- Color rendering index (CRI), which measures the ability of a light source to reveal the colors of an illuminated object compared to an ideal light source

- TM-30-18, an advanced standard for evaluating the fidelity and saturation levels of light sources, which also includes a graphic representation of the results

- Flicker index and percent flicker, which measure, respectively, the fraction of the light-output waveform that lies above the average light level and the maximal variation in the waveform's amplitude
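The two flicker metrics in the list above can be sketched for a sampled light-output waveform as follows. This is a minimal illustration using the commonly cited definitions: percent flicker as (max − min) / (max + min), and flicker index as the area above the mean divided by the total area. It assumes non-negative samples that are uniformly spaced over a whole number of periods; the test waveforms are synthetic, not measurements.

```python
import math


def percent_flicker(samples):
    """Peak-to-peak modulation: 100 * (max - min) / (max + min)."""
    hi, lo = max(samples), min(samples)
    if hi + lo == 0:
        return 0.0
    return 100.0 * (hi - lo) / (hi + lo)


def flicker_index(samples):
    """Area of the waveform above its mean level divided by the total area.

    With uniformly spaced samples the sample spacing cancels out,
    so plain sums can stand in for the two areas.
    """
    total = sum(samples)
    if total == 0:
        return 0.0
    mean = total / len(samples)
    area_above_mean = sum(s - mean for s in samples if s > mean)
    return area_above_mean / total


# Synthetic waveforms covering exactly one period (assumed for illustration).
N = 1000
steady = [1.0] * N
sine = [1.0 + 0.5 * math.sin(2.0 * math.pi * i / N) for i in range(N)]
square = [1.0] * (N // 2) + [0.0] * (N // 2)

for label, wave in (("steady", steady), ("sine", sine), ("square", square)):
    print(f"{label:>6}: percent flicker = {percent_flicker(wave):5.1f}%, "
          f"flicker index = {flicker_index(wave):.3f}")
```

A perfectly steady source scores zero on both metrics, while a fully modulated square wave reaches 100% flicker. The two numbers capture different things: percent flicker depends only on the extremes, whereas the flicker index also reflects the shape of the waveform.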
